Innovation Background

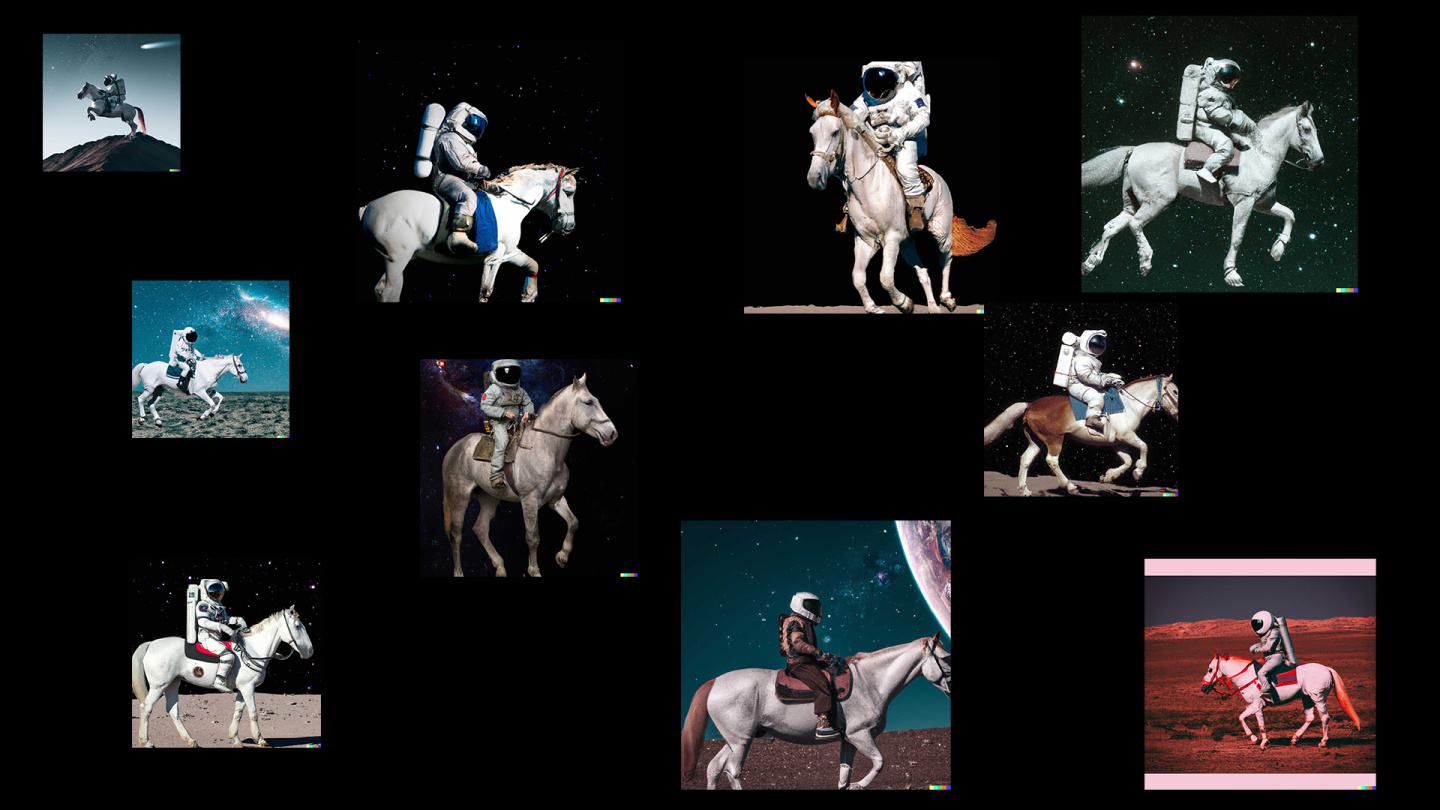

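The launch of DALL-E gave the internet a collective feel-good moment. DALL-E is an AI-based image generator, inspired by the artist Salvador Dalí and the lovable robot WALL-E, that uses natural language to produce whatever mysterious and beautiful image your heart desires. Seeing a typed input such as "a smiling gopher holding an ice cream cone" instantly come to life clearly resonated with the world.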

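Getting that smiling gopher and its attributes to appear on screen is no small feat. DALL-E 2 uses what is called a diffusion model, which tries to encode the entire text into a single description from which the image is generated. Once the text contains many more details, however, a single description struggles to capture them all. And although diffusion models are highly flexible, they sometimes struggle to understand how certain concepts are composed, for example by confusing the attributes of different objects or the relations between them.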

Innovation Process

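To generate more complex images with better understanding, scientists at MIT structured the typical model from a different angle: they added a series of models together, all cooperating to generate the desired image, with each one capturing a different aspect requested by the input text or labels. To create an image with two components, say one described by two sentences, each model handles one particular component of the image.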

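The seemingly magical models behind image generation work by proposing a series of iterative refinement steps that lead to the desired image. They start with a "rough" picture and gradually refine it until it becomes the chosen image. When multiple models are composed, they jointly refine the appearance at each step, so the result is an image that exhibits the attributes of every model. Having multiple models cooperate yields more creative combinations in the generated images.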
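The refinement loop described above can be illustrated with a toy sketch. This is not the team's implementation: the "image" is just a two-number list, each "model" is a hypothetical function (`score_toward`) that nudges one coordinate toward a target value, and the random noise that real diffusion samplers inject at each step is left out so the behavior is easy to follow. The names, step count, and step size are all assumptions chosen for illustration.

```python
import random


def score_toward(target, axis):
    """Hypothetical stand-in for one model: it only cares about one coordinate
    and returns the direction that moves that coordinate toward `target`."""
    def score(x):
        direction = [0.0] * len(x)
        direction[axis] = target - x[axis]
        return direction
    return score


def refine(models, x, steps=200, step_size=0.1):
    """Start from a rough sample and refine it step by step.
    At every step the suggestions of all models are added together."""
    x = list(x)
    for _ in range(steps):
        total = [0.0] * len(x)
        for model in models:
            for i, d in enumerate(model(x)):
                total[i] += d
        x = [xi + step_size * di for xi, di in zip(x, total)]
    return x


rng = random.Random(0)
rough = [rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]  # the "rough" starting picture
models = [score_toward(1.0, axis=0), score_toward(-2.0, axis=1)]
result = refine(models, rough)
print(result)  # both coordinates end up close to their targets
```

Because every step sums the contributions of both models, the final sample satisfies both constraints at once, which mirrors the idea that the composed result shows the attributes of every model.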

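Take a red truck and a green house as an example. When such sentences become very complicated, a model confuses the concepts of the red truck and the green house. A typical generator like DALL-E 2 might swap the colors and produce a green truck and a red house. The team's approach can handle this kind of attribute-to-object binding and, especially when there are multiple sets of things, it handles each object more accurately.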

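The model can also effectively model object positions and relational descriptions, which is a challenge for existing image generation models. For example, put an object and a cube in one position and a sphere in another. DALL-E 2 is good at generating natural images but sometimes has difficulty understanding object relations.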

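Composable Diffusion, the team's model, uses diffusion models together with compositional operators to combine text descriptions without further training. The approach captures text details more accurately than the original diffusion model, which directly encodes the words as a single long sentence. For example, given "a pink sky" and "a blue mountain on the horizon" and "cherry blossoms in front of the mountain," the team's model produced exactly that image, whereas the original diffusion model made the sky blue and everything in front of the mountains pink.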

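Although it excels at generating complex, photorealistic images, it still faces challenges: the model was trained on a much smaller dataset than those used for models like DALL-E 2, so there are some objects it simply cannot capture.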

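Composable Diffusion can work on top of generative models such as DALL-E 2, and the scientists want to explore continual learning as a potential next step. Given that more is usually added to object relations over time, they want to see whether diffusion models can start to "learn" without forgetting previously acquired knowledge, reaching a point where a model can produce images with both the previous and the new knowledge.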

Key Innovation Points

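The seemingly magical models behind image generation work by proposing a series of iterative refinement steps that lead to the desired image. They start with a "rough" picture and gradually refine it until it becomes the chosen image. When multiple models are composed, they jointly refine the appearance at each step, so the result is an image that exhibits the attributes of every model. Having multiple models cooperate yields more creative combinations in the generated images.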

Innovation Value

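This research proposes a new way of composing concepts in text-to-image generation: not by concatenating them to form a prompt, but by computing a score for each concept and composing the scores with conjunction and negation operators. It builds on the energy-based interpretation of diffusion models, so that older ideas about compositionality using energy-based models can be applied. The approach can also make use of classifier-free guidance. It outperforms the GLIDE baseline on various compositional benchmarks, and qualitatively it produces very different kinds of image generations.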
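One plausible reading of "computing a score for each concept and composing the scores" is to extend the usual classifier-free guidance update to several concepts: start from the unconditional prediction and add a weighted offset for each concept. The sketch below assumes that form. The function name `compose`, the weights, and the tiny two-element "predictions" are hypothetical, and a negative weight is used here as a simple stand-in for the negation operator.

```python
def compose(eps_uncond, eps_conds, weights):
    """Combine per-concept predictions in a classifier-free-guidance style.

    eps_uncond: prediction with no text condition (list of floats)
    eps_conds:  one prediction per concept, same length as eps_uncond
    weights:    one weight per concept; positive means "include this concept",
                negative means "steer away from it" (assumed negation)
    """
    if len(eps_conds) != len(weights):
        raise ValueError("need exactly one weight per concept")
    combined = list(eps_uncond)
    for eps_c, w in zip(eps_conds, weights):
        for i, (c, u) in enumerate(zip(eps_c, eps_uncond)):
            # Each concept contributes its offset from the unconditional prediction.
            combined[i] += w * (c - u)
    return combined


eps_uncond = [0.0, 0.0]
eps_sky = [1.0, 0.0]       # hypothetical prediction for "a pink sky"
eps_mountain = [0.0, 2.0]  # hypothetical prediction for "a blue mountain"

both = compose(eps_uncond, [eps_sky, eps_mountain], [1.5, 1.5])
sky_not_mountain = compose(eps_uncond, [eps_sky, eps_mountain], [1.5, -1.0])
print(both)              # [1.5, 3.0]
print(sky_not_mountain)  # [1.5, -2.0]
```

Each concept is evaluated separately and only the per-concept predictions are merged, so no single long prompt has to carry all the details, and no retraining is needed to add another concept.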

The innovative "Composable Diffusion" method uses multiple models to create more complex images

To generate more complex images with better understanding, the MIT scientists structured the typical model from a different angle: they added a series of models together, all cooperating to generate the desired image, with each one capturing a different aspect requested by the input text or labels. To create an image with two components, say one described by two sentences, each model handles one particular component of the image.

The seemingly magical model behind image generation obtains the desired image by suggesting a series of iterative refinement steps. It starts with the "rough" picture and gradually refines it until it becomes the selected image. By combining multiple models together, they collectively optimize the appearance of each step, so the result is an image that shows all the properties of each model. By having multiple models collaborate, you can get more creative combinations in the resulting images.

Take a red truck and a green house, for example. When such sentences become very complicated, a model confuses the concepts of the red truck and the green house. A typical generator like DALL-E 2 might swap the colors and produce a green truck and a red house. The team's approach can handle this kind of attribute-to-object binding and, especially when there are multiple sets of things, it handles each object more accurately.

The model can also effectively model object positions and relational descriptions, which is a challenge for existing image generation models. For example, put an object and a cube in one position and a sphere in another. DALL-E 2 is good at generating natural images but sometimes has difficulty understanding object relations.

Composable Diffusion, the team's model, uses diffusion models together with compositional operators to combine text descriptions without further training. The approach captures text details more accurately than the original diffusion model, which directly encodes the words as a single long sentence. For example, given "a pink sky" and "a blue mountain on the horizon" and "cherry blossoms in front of the mountain," the team's model produced exactly that image, whereas the original diffusion model made the sky blue and everything in front of the mountains pink.

Although it performs well at generating complex, photorealistic images, it still faces challenges: the model was trained on a much smaller dataset than those used for models like DALL-E 2, so there are some objects it simply cannot capture.

Composable Diffusion can work on top of generative models such as DALL-E 2, and the scientists want to explore continual learning as a potential next step. Given that more is usually added to object relations over time, they want to see whether diffusion models can start to "learn" without forgetting previously acquired knowledge, reaching a point where a model can produce images with both the previous and the new knowledge.
